Artificial intelligence & robotics

Pranav Rajpurkar

Developed a way for AI to teach itself to accurately interpret medical images.

Global

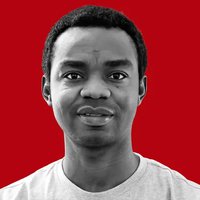

Daniel Omeiza

Working to solve the explainability problem in self-driving cars.

China

Xiang Wang

Developing multimodal AI-for-Science large models that can reliably understand and generate chemical molecules.

Europe

Akhilesh Goveas

Founder and CEO of SpectX.

Global

Richard Zhang

Invented the visual similarity algorithms underlying image-generating AI models.