Artificial intelligence & robotics

Manuel Le Gallo

He uses novel computer designs to make AI less power-hungry.

Global

Miguel Modestino

He is reducing the chemical industry’s carbon footprint by using AI to optimize reactions that are driven by electricity instead of heat.

Latin America

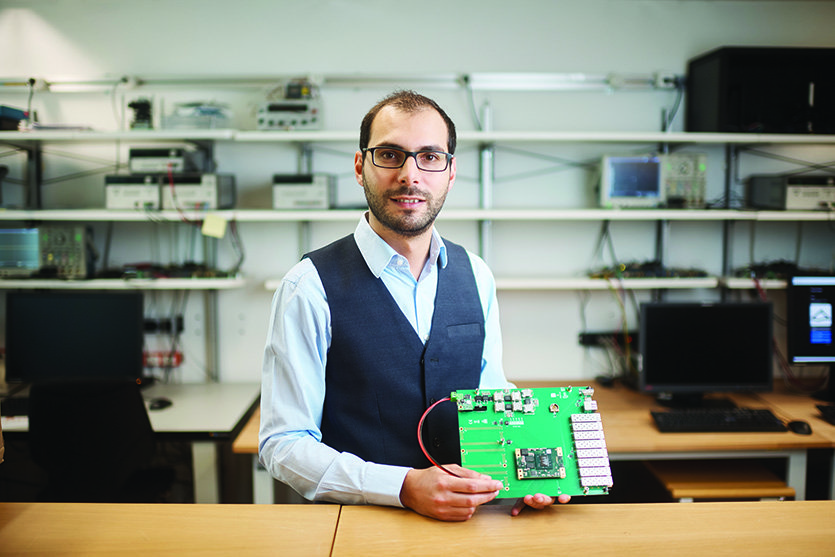

Renato Borges

Monitoring farms using IoT and AI to help farmers reduce costs and increase yields.

China

Yanan Sui

Using artificial intelligence to help paralyzed patients stand up again.

Japan

Ken Nakagaki

He invents innovative user interfaces; his fun-filled ideas pave the way for new relationships between people and machines.