Artificial intelligence & robotics

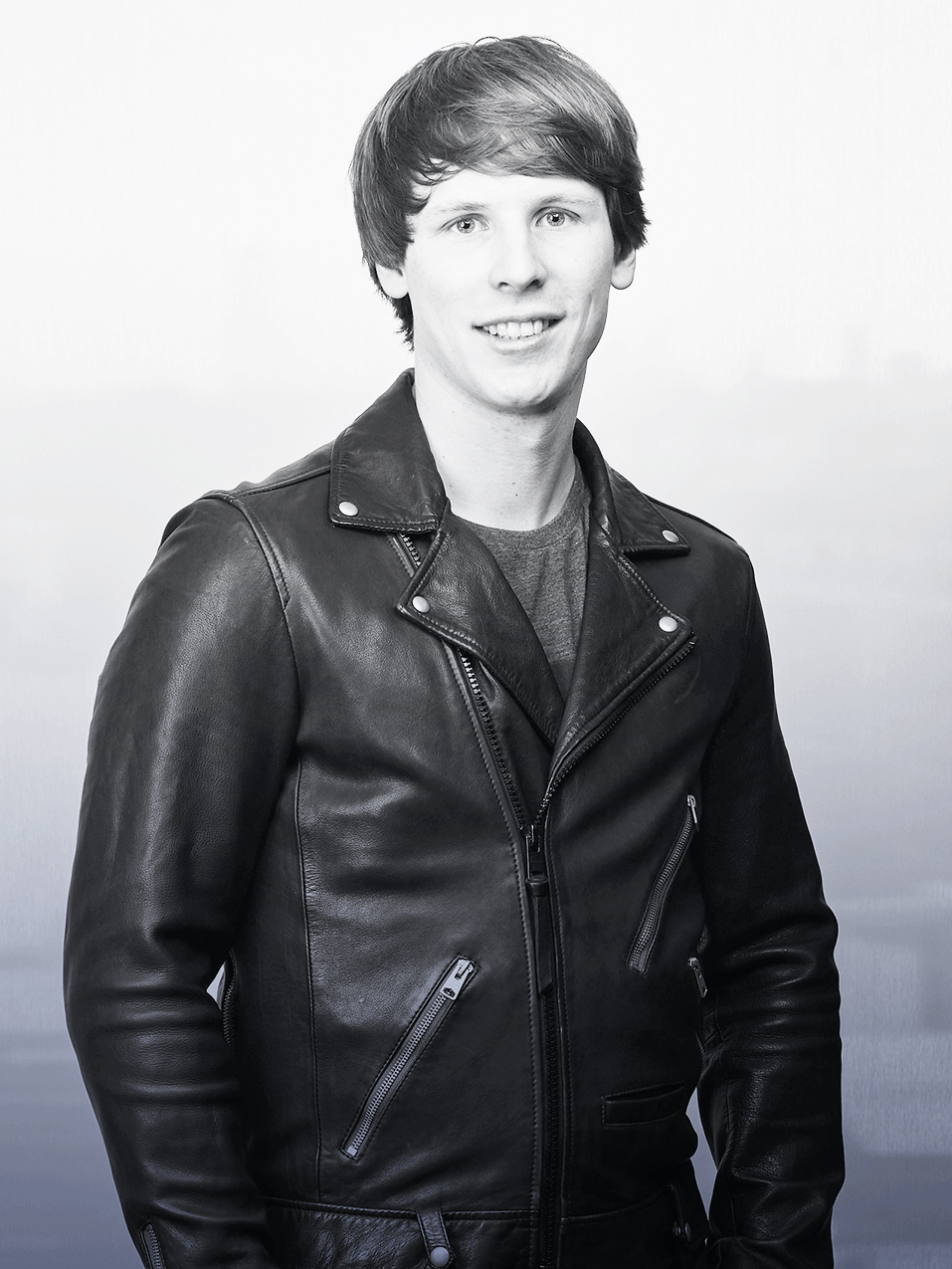

Julian Schrittwieser

AlphaGo beat the world’s best Go player. He helped engineer the program that whipped AlphaGo.

Europe

Samantha Payne

Children without upper limbs are becoming superheroes with her inexpensive, personalised Hero Arm prostheses

Latin America

Antonio Henrique Dianin

His telepresence robot, inspired by “The Big Bang Theory”, helps healthcare workers assist their patients

Latin America

Aline Oliveira

She predicts the performance of family-run farms to help them access more advantageous loans while reducing financial risk

Latin America

Cristina de la Peña

Her technology, which analyzes the public's reactions to a campaign in real time, could revolutionize advertising and marketing